
One of the ways doctors gain insight into imminent heart failure is by examining lung X-rays for excess fluid in the lungs. MIT researchers have developed a machine learning tool that can help with that task. The program can detect severe cases with an extremely high level of accuracy, and the team that made it is hopeful that it will assist with other conditions as well.

AI is Leading The Way

MIT’s CSAIL (Computer Science and Artificial Intelligence Lab) performed the research. It joins several other promising machine learning and AI tools that are reshaping the medical diagnosis landscape. Modern computing power lets these algorithms pore over medical imaging data and spot critical, hard-to-notice changes in the body’s condition that humans just can’t see. This opens up some truly exciting possibilities.

This could mean catching cancer diagnoses that were previously missed, which makes old CT scans and lung X-rays more useful than they used to be. It could also mean detecting conditions such as Alzheimer’s years before they become visible to doctors. Computers simply have better attention to detail. AI can also be used to analyze ECG (electrocardiogram) results to help doctors learn which patients are most at risk of heart failure, which it does by identifying dysfunction in the left ventricle. This is a welcome change for the medical industry.

Lung X-Rays Are an Effective Tool For Diagnosis

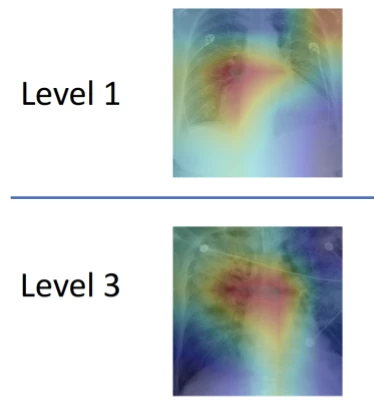

Doctors use lung X-rays to assess fluid build-up, which is especially important for patients at risk of heart failure. These assessments are based on subtle features, and that often leads to inconsistent diagnoses and treatment.

The team used over 300,000 lung X-rays and their radiology reports to train its algorithm. To do so, they had to develop linguistic rules to ensure that the data was analyzed correctly.

Our model can turn both images and text into compact numerical abstractions from which an interpretation can be derived. We trained it to minimize the difference between the representations of the X-ray images and the text of the radiology reports, using the reports to improve the image interpretation.

Geeticka Chauhan
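To make the idea in that quote a little more concrete, here is a minimal sketch of what "minimizing the difference between representations" can look like. It is not the CSAIL model. The tiny linear encoder, the feature values, the fixed report embedding, and the function names `embed`, `alignment_loss`, and `train_step` are all assumptions made up for illustration, and only the image side is trained here to keep the example short.

```python
def embed(weights, features):
    """Project a feature vector into a compact embedding (matrix-vector product)."""
    return [sum(w * x for w, x in zip(row, features)) for row in weights]


def alignment_loss(image_vec, text_vec):
    """Mean squared difference between an image embedding and a text embedding."""
    return sum((a - b) ** 2 for a, b in zip(image_vec, text_vec)) / len(image_vec)


def train_step(image_weights, image_features, text_vec, lr=0.1):
    """One gradient-descent step that pulls the image embedding toward the text embedding.

    Updates image_weights in place. The text embedding is treated as fixed,
    which is a simplification chosen for this sketch.
    """
    image_vec = embed(image_weights, image_features)
    dim = len(image_vec)
    for i, row in enumerate(image_weights):
        # Derivative of the loss with respect to the i-th embedding coordinate.
        grad_out = 2.0 * (image_vec[i] - text_vec[i]) / dim
        for j in range(len(row)):
            row[j] -= lr * grad_out * image_features[j]


# Hypothetical toy data: three image features, a two-dimensional embedding.
image_weights = [[0.5, -0.2, 0.1], [0.3, 0.8, -0.4]]
image_features = [0.2, 0.7, 0.1]
report_embedding = [1.0, 0.0]  # stands in for the encoded radiology report

for step in range(5):
    loss = alignment_loss(embed(image_weights, image_features), report_embedding)
    print(f"step {step}: loss = {loss:.4f}")
    train_step(image_weights, image_features, report_embedding)
```

Each step nudges the image embedding closer to the report embedding, so the printed loss shrinks. In a real system both encoders would be neural networks trained on many image and report pairs.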

More than likely, about 50% of the time the AI simply agreed with humans who were themselves incorrect. At other times, the AI was perceived to be incorrect, probably because it was actually right and the human-generated data it was compared against was wrong.

If you liked this article, you should check out this research paper describing how the technology can be accessed.
