
Los Altos, CA: Cerebras Systems, a chipmaker focused on artificial intelligence acceleration, has unveiled what is currently the world’s largest processor, a 46,225 square millimeter chip with 2.6 trillion transistors.

What’s the world’s largest CPU?

The title of the world’s largest CPU goes to Cerebras Systems’ Wafer Scale Engines, WSE and WSE2. Both chips measure 46,225 mm². The first Wafer Scale Engine (WSE) was built on TSMC’s 16nm process and has 1.2 trillion transistors; its successor, WSE2, moves to TSMC’s 7nm process, which raises the transistor count to 2.6 trillion. Each is 56 times larger than the next-largest chip, Nvidia’s A100 GPU.

Cerebras Systems has unveiled the Wafer Scale Engine 2, which is both the world’s largest CPU and the most powerful processor.

Cerebras’ original WSE (Wafer Scale Engine) was already impressive: it was the most powerful AI processor available and a feat of cutting-edge engineering in just about every way you can think of. Now Cerebras has gone even further. The first Wafer Scale Engine packed a mind-boggling 1.2 trillion transistors built on a 16nm process, enough to fit 400,000 cores into its 46,225 square millimeters of silicon. Stop and think about that for a moment. Four hundred thousand cores. Wow. The chip also carried 18 GB of ultra-high-speed on-chip memory. Connecting it all is a blazing-fast on-chip fabric with up to 9 PB/s of memory bandwidth, and the whole chip draws around 15 kW of power!
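To make those totals more tangible, here is a back-of-the-envelope Python sketch that divides the WSE1 figures above by the core count. It assumes decimal gigabytes and a perfectly even split across cores, which a real chip layout will not exactly match; the helper name `per_core` is purely illustrative.

```python
# First-generation Wafer Scale Engine (WSE1) figures quoted in this article.
WSE1_CORES = 400_000
WSE1_TRANSISTORS = 1.2e12
WSE1_MEMORY_BYTES = 18e9  # 18 GB, assuming decimal gigabytes (10**9 bytes)


def per_core(total: float, cores: int) -> float:
    """Average share of a chip-wide resource per core, assuming an even split."""
    return total / cores


memory_per_core = per_core(WSE1_MEMORY_BYTES, WSE1_CORES)
transistors_per_core = per_core(WSE1_TRANSISTORS, WSE1_CORES)

print(f"On-chip memory per core: {memory_per_core / 1e3:.0f} KB")
print(f"Transistors per core (memory included): {transistors_per_core / 1e6:.1f} million")
```

With these inputs, each core averages about 45 KB of local memory and roughly 3 million transistors.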

Cerebras didn’t stop there with the world’s largest CPU

In a bid to one-up itself, Cerebras has already unveiled the second version of the WSE, the Wafer Scale Engine 2.

WSE2 is simply amazing. This massive chip has an astonishing 2.6 trillion transistors. The architecture has not changed much since WSE1: Cerebras took the WSE1 design, tweaked it slightly, and moved it to TSMC’s 7nm process. More than doubling the transistor count brings a roughly proportional rise in core count, to a breathtaking 850,000 cores. The best part is that Cerebras already has the massive new chips up and running in its labs.
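A short Python sketch can check how "proportional" that rise is, using only the figures in this article and the fact that both generations use the same die area. The dictionary layout is just an illustrative choice.

```python
# Both WSE generations use the same 46,225 mm² of silicon.
WSE_AREA_MM2 = 46_225

generations = {
    "WSE1 (16nm)": {"transistors": 1.2e12, "cores": 400_000},
    "WSE2 (7nm)": {"transistors": 2.6e12, "cores": 850_000},
}

# Transistor density in millions of transistors per square millimeter.
densities = {
    name: spec["transistors"] / WSE_AREA_MM2 / 1e6
    for name, spec in generations.items()
}

old, new = generations["WSE1 (16nm)"], generations["WSE2 (7nm)"]
transistor_ratio = new["transistors"] / old["transistors"]
core_ratio = new["cores"] / old["cores"]

for name, density in densities.items():
    print(f"{name}: {density:.1f} million transistors per mm²")
print(f"Transistors grew {transistor_ratio:.2f}x; cores grew {core_ratio:.3f}x")
```

Density rises from about 26 to about 56 million transistors per mm², and transistors (about 2.17x) and cores (2.125x) grow by nearly the same factor.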

This is literally the largest chip that you can make out of a single 300-mm silicon wafer. Cerebras revealed it at the Hot Chips 2020 conference. The Wafer Scale Engine 2 truly is the world’s largest CPU: at 56 times the area of the next-largest chip, it dwarfs everything else.

The world’s most powerful processor

Compare the Wafer Scale Engine 2 to the second-largest chip in the world, the Nvidia A100. The Nvidia graphics processor contains about 54.2 billion transistors on an 826 mm² die. With roughly 123 times as many cores as the A100 (850,000 versus the GPU’s 6,912 CUDA cores), WSE2 is truly massive. I mean, just look at this thing. Usually, a photo of a whole silicon wafer shows many separate processors, but this is just one. WSE2 also has about 1,000 times the on-chip memory (40 GB versus the A100’s 40 MB) and more than 10,000 times the memory bandwidth. Those ratios describe how much data the chip can hold and move, though, not how much faster it finishes real workloads overall.
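The size ratios are easy to reproduce. This Python sketch uses the WSE2 and A100 die areas and transistor counts given in this article; the A100 core figure (6,912 FP32 CUDA cores) is an outside number, and a CUDA core is not directly comparable to a WSE2 core, so treat that ratio as a rough headcount only.

```python
def ratio(a: float, b: float) -> float:
    """How many times larger a is than b."""
    return a / b


wse2 = {"area_mm2": 46_225, "transistors": 2.6e12, "cores": 850_000}
# Area and transistor count as quoted in this article; the core count is the
# A100's FP32 CUDA cores, which are not equivalent units to WSE2 cores.
a100 = {"area_mm2": 826, "transistors": 54.2e9, "cores": 6_912}

ratios = {key: ratio(wse2[key], a100[key]) for key in wse2}
for key, value in ratios.items():
    print(f"{key}: WSE2 / A100 = {value:.0f}x")
```

The area ratio comes out at about 56x, transistors at about 48x, and cores at about 123x.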

As Moore’s law slows, it’s taking around three to four years for chip transistor counts to double. The number of transistors on a chip used to double roughly every 18 to 24 months, but that changed quite a few years ago. Meanwhile, the computing demand of AI workloads has grown roughly 300,000-fold, doubling about every three months. At that pace, the 300,000-fold jump takes about four and a half years, a span in which even an 18-month Moore’s law doubling delivers only about an 8x gain, so AI demand outgrew transistor counts by a factor in the tens of thousands. And well, if you can’t get more transistors per mm², then you need to work with more silicon. A lot more silicon.
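The compounding behind that comparison can be checked with a short Python sketch. It takes the 300,000-fold growth and three-month doubling time as given and assumes Moore’s law doubling periods of 18 and 24 months; the function name `moore_comparison` is just illustrative.

```python
import math


def moore_comparison(ai_growth: float, ai_doubling_months: float,
                     moore_doubling_months: float) -> tuple[float, float, float]:
    """Compare exponential growth in AI compute demand with Moore's law.

    Returns (months for AI demand to reach ai_growth,
             Moore's-law growth over that same period,
             how many times more AI demand grew than transistor counts did).
    """
    doublings = math.log2(ai_growth)  # number of doublings needed
    months = doublings * ai_doubling_months
    moore_growth = 2 ** (months / moore_doubling_months)
    return months, moore_growth, ai_growth / moore_growth


for moore_months in (18, 24):
    months, moore_growth, gap = moore_comparison(300_000, 3, moore_months)
    print(f"Moore doubling every {moore_months} months: {months:.0f} months elapsed, "
          f"Moore growth {moore_growth:.1f}x, gap {gap:,.0f}x")
```

Because both curves are exponential, the size of the gap depends heavily on the doubling period assumed for Moore’s law, which is why such comparisons are best read as orders of magnitude.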

This world’s largest CPU is going to be hard to out-do

You literally can’t make a CPU bigger than this from a standard wafer. At 46,225 mm² (about 71.6 in²), the chip’s area is limited by the size of the 300-mm silicon wafer it’s made from: the world’s biggest chip can only be as large as the wafer it is produced on.
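The geometry is simple to check. This Python sketch treats the wafer as a perfect 300 mm disc and ignores the edge region that fabs cannot use, so it only shows roughly how much of the disc the chip covers.

```python
import math

CHIP_AREA_MM2 = 46_225
WAFER_DIAMETER_MM = 300
MM2_PER_SQUARE_INCH = 25.4 ** 2  # 645.16 mm² per in²

# Area of an idealized circular wafer: pi * r^2.
wafer_area_mm2 = math.pi * (WAFER_DIAMETER_MM / 2) ** 2
fraction_used = CHIP_AREA_MM2 / wafer_area_mm2
chip_area_in2 = CHIP_AREA_MM2 / MM2_PER_SQUARE_INCH

print(f"Wafer area: {wafer_area_mm2:,.0f} mm²")
print(f"Chip covers {fraction_used:.1%} of the wafer ({chip_area_in2:.1f} in²)")
```

A 300-mm wafer offers about 70,686 mm², so the chip covers roughly 65% of it; the rest is lost to the round edge.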

To put the numbers into context, Nvidia’s A100, the second-biggest chip, measures 826 mm² and contains only 54.2 billion (0.0542 trillion) transistors.

CS-1 is powered by the Cerebras Wafer Scale Engine – the largest CPU ever built.

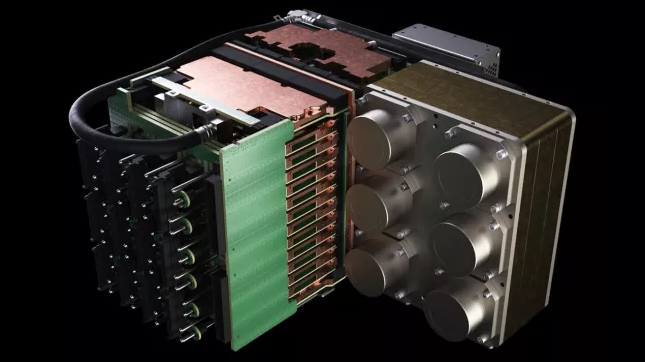

First-generation WSE systems, the CS-1, house the chip in a custom chassis that provides robust power delivery and advanced cooling to feed the power-hungry slab of silicon and keep it from overheating. The larger the chip, the more power it tends to consume, and nearly all of that power ends up as heat that has to be removed. Because both generations span the same standard-size wafer, the Wafer Scale Engine 2 occupies exactly the same area as its predecessor: it’s the same size, with more than double the transistors and cores. Cerebras is also increasing the amount of on-chip memory for WSE2 and bolstering the chip’s interconnects to raise on-chip bandwidth even further.
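A rough Python sketch shows why cooling is the hard part: dividing power by area gives power density, and multiplying power by time gives the energy that ends up as heat. It uses the 15 kW figure quoted earlier for the WSE1 and assumes a 400 W rating for an SXM-form-factor A100, a number that does not appear in this article; the helper `power_profile` is hypothetical.

```python
def power_profile(power_w: float, area_mm2: float) -> tuple[float, float]:
    """Return (power density in W/cm², energy converted to heat per day in kWh).

    Assumes a constant power draw around the clock and that essentially all
    electrical power ends up as heat.
    """
    area_cm2 = area_mm2 / 100  # 1 cm² = 100 mm²
    density_w_per_cm2 = power_w / area_cm2
    heat_kwh_per_day = power_w * 24 / 1000
    return density_w_per_cm2, heat_kwh_per_day


chips = {
    "WSE1": (15_000, 46_225),  # ~15 kW figure quoted in this article
    "A100": (400, 826),        # ASSUMED 400 W rating; 826 mm² from this article
}

profiles = {name: power_profile(*spec) for name, spec in chips.items()}
for name, (density, heat) in profiles.items():
    print(f"{name}: {density:.1f} W/cm², {heat:.1f} kWh of heat per day")
```

Under these assumptions, the WSE1’s power density (about 32 W/cm²) is actually lower than the A100’s (about 48 W/cm²); the challenge is the sheer total, roughly 360 kWh of heat per day from a single chip.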

What are the disadvantages of the world’s largest CPU?

Larger computer chips use a lot more power and are more difficult to keep cool. Compared with smaller chips, the world’s largest CPU needs specialized infrastructure to support it, which will limit who can practically use these devices. Still, artificial intelligence is definitely where the money is right now, and with 850,000 cores, the world’s largest chip is made for AI development.

Is This the First Purpose-Built AI Chip?

No. Cerebras is nowhere near the first company to develop chips specifically to power AI systems. This is, however, by far the largest and most powerful artificial intelligence chip ever built.

Google developed its TPU (Tensor Processing Unit) chips, unveiled in 2016, to accelerate certain software, including Google’s own language translation app. Google now offers TPUs to outside customers, mainly as a service through Google Cloud. In 2017, Huawei announced that it had added an NPU (Neural Processing Unit) to its Kirin line of chips, which the Chinese company uses in its own smartphones. NPUs speed up the calculation of matrix multiplications, a type of arithmetic at the heart of many AI tasks.
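Since matrix multiplication is the workload these chips target, a minimal pure-Python sketch helps show what is being accelerated. It multiplies a small batch of inputs by a weight matrix, the core operation of a fully connected neural-network layer, and counts the multiply-accumulate (MAC) steps. The function name and toy values are illustrative and not taken from any vendor’s API.

```python
def matmul(a: list[list[float]], b: list[list[float]]) -> tuple[list[list[float]], int]:
    """Multiply an m x k matrix by a k x n matrix with plain nested loops.

    Returns the product and the number of multiply-accumulate (MAC)
    operations performed, the work that NPUs and similar chips speed up.
    """
    m, k = len(a), len(a[0])
    if len(b) != k:
        raise ValueError("inner dimensions must match")
    n = len(b[0])
    out = [[0.0] * n for _ in range(m)]
    macs = 0
    for i in range(m):
        for j in range(n):
            acc = 0.0
            for p in range(k):
                acc += a[i][p] * b[p][j]  # one multiply-accumulate
                macs += 1
            out[i][j] = acc
    return out, macs


# Toy "layer": a batch of 2 inputs with 3 features, times a 3 x 2 weight matrix.
inputs = [[1, 2, 3], [4, 5, 6]]
weights = [[1, 0], [0, 1], [1, 1]]
outputs, mac_count = matmul(inputs, weights)
print(outputs, mac_count)
```

The MAC count grows as m × k × n, so doubling every dimension multiplies the work by eight, which is why hardware that performs many MACs in parallel pays off for AI workloads.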

Featured Image Credit: Cerebras